A Child is Born!

I’ve always found it rather irksome that although I teach the 4 pillars of building physics: lighting, thermal, ventilation and acoustics, the VI-Suite could never do the last one. Well, no more. Thanks to the people behind the excellent pyroomacoustics, a Python library for room acoustics simulation, I have given birth to AuVi, my fourth and hopefully last child.

AuVi provides two additional nodes: AuVi Simulate, which sits in the VI-Suite simulation nodes menu, and AuVi Convolve, which sits in the VI-Suite Output nodes menu. I have included details in the updated user manual.

AuVi Simulate takes room geometry and materiality defined in Blender and simulates reverberation times and impulse responses (IRs) for that space. AuVi-specific material characteristics are defined in the Blender material panel as usual with the AuVi Material option. Absorption and scatter coefficients are required; they can be entered manually or read from the built-in database. It is not yet possible to write custom entries to the database as you can with EnVi. That will hopefully come later.

Pyroomacoustics can combine an image-source technique (for early reflections) with ray tracing (for late reflections). The options within this node control the number of image iterations, the number of rays cast, and the radius of the receiving points.

These receiving points, and source points, can be positioned with Blender empties within the model. These empties are then given either a source or listener AuVi property within the VI-Suite Object menu. In addition, listener points can currently also be set using the same method as specifying LiVi sensor points: a mesh with a 'Light sensor' material type will create a listener at the centre of each face.

When simulating on Linux and OS X, a Kivy window will appear, but it cannot show the progress of the simulation; it only provides a cancel button. On Windows, the Blender interface will simply lock until the calculation is completed, so be careful with the simulation parameters as they can lead to long simulation times. Image iterations > 3 on complex models, for example, can take a very long time. Start low and increment upwards is my advice.
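
To give a feel for why the image iterations setting bites so hard on complex models, here is a minimal sketch of the textbook upper bound on the number of candidate image sources in a room with planar walls. It assumes every wall can mirror every image except the one that created it, and it ignores visibility checks, so it is not a description of what pyroomacoustics actually computes internally. The function name `max_image_sources` is my own, purely for illustration.

```python
def max_image_sources(n_walls: int, max_order: int) -> int:
    """Upper bound on candidate image sources up to max_order reflections.

    Assumption: each image can be mirrored in every wall except the one
    that produced it, so order k contributes n_walls * (n_walls - 1)**(k - 1).
    """
    if n_walls < 1 or max_order < 0:
        raise ValueError("n_walls must be >= 1 and max_order >= 0")
    total = 0
    for order in range(1, max_order + 1):
        total += n_walls * (n_walls - 1) ** (order - 1)
    return total


# Compare a simple box (6 faces) with a modestly detailed mesh (50 faces).
for walls in (6, 50):
    for order in (1, 2, 3, 4):
        print(f"{walls} walls, order {order}: {max_image_sources(walls, order)}")
```

For a six-sided box the bound at order 3 is 186, whereas for a 50-face model it is already 122,550, and order 4 pushes it past six million. That is the reason to start low.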

Once the simulation is finished, reverberation times (RT60) are stored in the node for each source-listener pair. These can be viewed in the VI Metrics node. Impulse responses are also stored for each source-listener pair where the listener is defined by an empty. These can be plotted with the VI-Chart node. Both can be exported with the VI CSV node.
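
If you export an impulse response and want to sanity-check a reverberation time yourself, one common approach is Schroeder backward integration followed by a straight-line fit to part of the decay curve. The sketch below is pure Python and runs on a synthetic decay. It is not necessarily how AuVi or pyroomacoustics arrives at its RT60 values, and the function names and the -5 to -25 dB fitting range are my own assumptions.

```python
import math


def schroeder_decay_db(ir):
    """Energy decay curve in dB (0 dB at t = 0) by backward integration."""
    running = 0.0
    edc = [0.0] * len(ir)
    for i in range(len(ir) - 1, -1, -1):
        running += ir[i] * ir[i]
        edc[i] = running
    if not edc or edc[0] <= 0.0:
        raise ValueError("impulse response contains no energy")
    ref = math.log10(edc[0])
    # edc never increases, so zeros can only sit at the tail and are dropped.
    return [10.0 * (math.log10(e) - ref) for e in edc if e > 0.0]


def estimate_rt60(ir, fs, start_db=-5.0, end_db=-25.0):
    """Least-squares line through the decay between start_db and end_db,
    extrapolated to a 60 dB drop."""
    decay = schroeder_decay_db(ir)
    pts = [(i / fs, d) for i, d in enumerate(decay) if end_db <= d <= start_db]
    if len(pts) < 2:
        raise ValueError("not enough decay range to fit")
    mean_t = sum(t for t, _ in pts) / len(pts)
    mean_d = sum(d for _, d in pts) / len(pts)
    num = sum((t - mean_t) * (d - mean_d) for t, d in pts)
    den = sum((t - mean_t) ** 2 for t, _ in pts)
    slope = num / den  # dB per second, expected to be negative
    if slope >= 0.0:
        raise ValueError("decay curve does not fall")
    return -60.0 / slope


# Synthetic IR: an envelope whose energy falls 60 dB in exactly 0.5 s.
fs = 8000
true_rt60 = 0.5
ir = [10.0 ** (-3.0 * n / (fs * true_rt60)) for n in range(fs)]
print(f"estimated RT60: {estimate_rt60(ir, fs):.3f} s")
```

A real IR is noisier than this synthetic one, so the fitting range matters more in practice than it does here.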

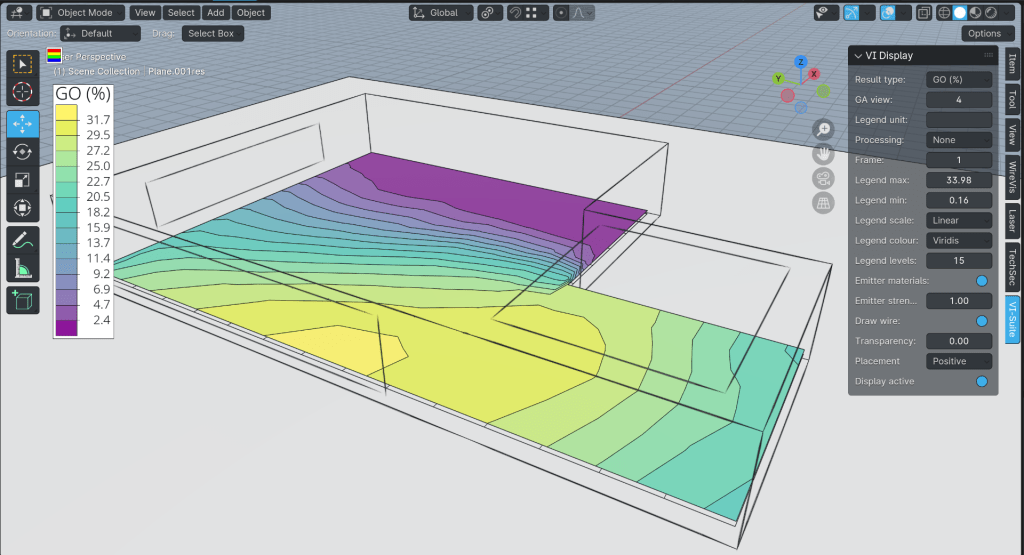

An AuVi Display button will appear in the VI Display panel, and this will plot out the reverberation times for each mesh-based listener point in a similar way to LiVi, and the result geometry is stored in the LiVi Results collection.

IRs can also be used to hear how the space would actually sound, with the AuVi Convolve node. Connecting the IR output socket of the AuVi Simulate node to the IR input socket of the AuVi Convolve node will allow you to select an anechoic sound sample. Some sources of anechoic sound files are listed below. Any sound file loaded must be in WAV format, and preferably at a sample rate of 16 kHz, although AuVi will attempt to resample the audio file if it isn't. Once a sound file has been selected, a Play button will appear to play the audio.

A desired source/listener IR can then be selected and the Convolve button pressed to convolve the original WAV file with the IR. Another Play button is then exposed to play the convolved audio, and a Save button will save the convolved audio to hard disk.
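
For anyone wondering what the convolution step amounts to, here is a minimal pure-Python sketch: every sample of the dry (anechoic) signal launches a scaled copy of the IR, and the copies are summed. It assumes both signals are plain lists of floats at the same sample rate. It is a direct-form illustration only, not AuVi's actual implementation, and for real audio lengths you would want an FFT-based routine such as `scipy.signal.fftconvolve` instead. The function names are hypothetical.

```python
def convolve(dry, ir):
    """Direct-form linear convolution of a dry signal with an impulse response."""
    if not dry or not ir:
        return []
    out = [0.0] * (len(dry) + len(ir) - 1)
    for i, x in enumerate(dry):
        if x == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(ir):
            out[i + j] += x * h
    return out


def normalise(signal, peak=0.9):
    """Scale so the largest absolute sample equals peak, to avoid clipping on save."""
    largest = max((abs(s) for s in signal), default=0.0)
    if largest == 0.0:
        return list(signal)
    return [s * peak / largest for s in signal]


# Tiny example: a two-click "sound" in a room with a three-tap decaying IR.
dry = [1.0, 0.0, 0.5]
ir = [1.0, 0.5, 0.25]
wet = convolve(dry, ir)
print(wet)
print(normalise(wet))
```

The output is longer than the input by the length of the IR minus one sample, which is the reverberant tail you hear after the original sound stops.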

There are a couple of new dependencies that need to be installed for pyroomacoustics, which means the automatic installation of required dependencies has been updated and a complete reinstall of the VI-Suite will be required.

As usual, there is a video below to go through the basic steps.

In other news, the VI-Suite now works with NumPy 2.0, so those on rolling Linux distributions such as Arch should no longer encounter matplotlib problems.

Anechoic sound sources: